Jul 28, 2021

Persona

Increasingly, colleges and universities have realized the importance of search engine optimization, as organic search has become the #1 source of prospective student website traffic.

While optimizing program pages with keywords and providing better content are critical to higher education websites, institutions can’t forget about technical SEO. In June 2021, Google started rolling out its page experience algorithm update, which focuses on site performance, alongside a separate core algorithm update. To prepare for these changes and prevent any drops in rankings, institutions need to enhance their sites’ technical health.

There are dozens of technical components that make up the foundation of your SEO strategy. These elements ensure your website can be indexed by search engines and provide a good user experience, thus helping your institution generate higher organic traffic and conversions. But how do you know if your website’s technical health is in good shape?

We understand that not everyone is an SEO expert, so we put together a 10-point fundamental technical SEO checklist for you to begin auditing your website. If your website fails any of the following checks, it’s time to invest in a comprehensive professional audit.

1. Is your website secured with HTTPS?

HTTPS has been a ranking factor since 2014, when Google began placing a greater emphasis on security and privacy for users. Some colleges and universities may think they have this covered, but we often come across websites that still have old HTTP URLs live and indexed in Google.

If your entire website is not secured by HTTPS encryption, it is not only more vulnerable to cyberattacks but could also prevent users from accessing your website or submitting their information via your web forms.

When prospective students visit a website that displays a “not secure” warning in the browser, they can be very hesitant to submit an RFI or even an application. What’s more, your organic search rankings can also be impacted, as Google does not wish to serve search results that could provide a negative user experience.

If your website is still on HTTP, it should be upgraded to HTTPS to provide a more secure environment to users. The HTTP version of your entire site should then be globally redirected to the HTTPS version to ensure that users can no longer access the non-secure version.

Pro tip: First, go to your website and check that the URL begins with “https” and that the browser shows a padlock icon, indicating that the connection is secure. Next, change the URL to “http” and see whether it redirects to the https version. If not, you likely need to set up a global redirect across the entire website so that the old non-secure URLs point to the new secure ones.
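If you have several domains or microsites to check, the same test can be scripted. The sketch below uses only Python’s standard library: it switches the scheme to http://, lets `urllib` follow any redirects, and reports whether the final URL is secure. The function names are illustrative, and sampleuniversity.edu is this article’s placeholder domain.

```python
import urllib.request
from urllib.parse import urlsplit


def http_variant(url: str) -> str:
    """Return the same URL with its scheme switched to plain http."""
    return urlsplit(url)._replace(scheme="http").geturl()


def is_secure(url: str) -> bool:
    """True if the URL uses the https scheme."""
    return urlsplit(url).scheme == "https"


def check_https_redirect(url: str, timeout: float = 10.0) -> bool:
    """Request the http:// version of `url` and report whether the
    redirect chain ends on an https:// URL.

    urlopen follows 3xx redirects automatically, and `response.url`
    holds the address of the final response.
    """
    with urllib.request.urlopen(http_variant(url), timeout=timeout) as response:
        return is_secure(response.url)
```

Calling `check_https_redirect("https://sampleuniversity.edu/")` should return `True` once the global redirect is in place (it raises an error if the HTTP version doesn’t respond at all). Because it only tests the URL you pass in, run it against a sample of deeper pages as well as the homepage.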

Finally, all internal links on your website should be changed to the HTTPS URLs, even if the old URLs have a redirect. This is because Google can read the non-secure URL in the code of the page, which can impact your search rankings. What’s more, these HTTP URLs create a vulnerability and leave a web page open to man-in-the-middle attacks.
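To find leftover HTTP links, you can scan a page’s HTML for `href` and `src` attributes that still start with http://. This is a minimal sketch using Python’s built-in HTML parser; it assumes you already have the page source (for example, exported from your CMS), and it does not distinguish internal links from external ones. It also flags `src` attributes because images and scripts loaded over HTTP on an HTTPS page are treated by browsers as mixed content.

```python
from html.parser import HTMLParser


class InsecureLinkFinder(HTMLParser):
    """Collect href/src attribute values that still use plain http://."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None.
        for name, value in attrs:
            if name in ("href", "src") and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_insecure_links(html: str) -> list:
    """Return every http:// href or src found in the HTML, in document order."""
    finder = InsecureLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```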

2. Are there duplicate versions of your website live simultaneously?

One thing that many higher education institutions neglect to address is having multiple versions of their website live simultaneously. To build off point #1 on our checklist, when moving your website to HTTPS, you must redirect the entire HTTP version of the website to the secure version; otherwise, the entire website will live in two places. The www. and non-www. versions of a domain are another common source of duplication. Together, these variants could look like this:

- https://sampleuniversity.edu

- http://sampleuniversity.edu

- https://www.sampleuniversity.edu

- http://www.sampleuniversity.edu

When you access these different versions of your website, they might look the same on the front end, but they’re considered four different websites by search engines. You should only have one version indexed on Google; otherwise, it could lead to duplicate content and hurt your website’s organic traffic and ranking.

Pro tip: How do you know if your website has multiple versions live? Go to the website and click on the address bar in the browser. Change the URL to other versions and see if that automatically redirects you back to the primary website version. If it doesn’t, you should globally redirect all other website versions to the primary version.
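This four-variant check can also be automated. The sketch below, with hypothetical function names, builds the www/non-www and http/https combinations for a domain and lists any that do not end on your chosen primary URL after redirects. The `resolve` parameter defaults to a live request through `urllib`, but you can pass in any function that maps a URL to its final destination.

```python
import urllib.request


def site_variants(domain: str) -> list:
    """Return the four scheme/host combinations search engines treat as separate sites."""
    bare = domain[len("www."):] if domain.startswith("www.") else domain
    return [f"{scheme}://{host}/"
            for scheme in ("https", "http")
            for host in (bare, "www." + bare)]


def final_url(url: str, timeout: float = 10.0) -> str:
    """Fetch `url`, following redirects, and return where it ended up."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.url


def stray_variants(domain: str, primary: str, resolve=final_url) -> list:
    """List the variants that do not land on the primary URL after redirects."""
    return [v for v in site_variants(domain) if resolve(v) != primary]
```

An empty list from `stray_variants("sampleuniversity.edu", "https://sampleuniversity.edu/")` means every variant funnels into the primary version.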

3. Does your website suffer from duplicate content?

Duplicate content is one of the most common issues on higher education websites. When two or more pages have identical or nearly identical content, Google gets confused, as it’s not sure which version of the page is correct. As a result, the page you want to appear in search results might not rank, and organic traffic may be split between the duplicate pages.

Pro tip: We often see duplication happen between program pages and catalog pages on higher ed websites. You should check if the content of your program pages is almost exactly the same as the corresponding catalog page. If it is, the front-facing program pages may not be indexed correctly as the authoritative content. The program page should be more marketing-centric, whereas the catalog page should focus more on the technical requirements of the program.
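One rough way to measure how close a program page is to its catalog page is to compare their overlapping three-word sequences (“shingles”) using Jaccard similarity. The sketch below illustrates that general technique; it is not how Google detects duplicates. It assumes you have already extracted the visible text of both pages, and any cutoff you choose (for example, reviewing pairs that score above 0.8) is a judgment call rather than a published threshold.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Set of overlapping `size`-word sequences, lowercased with punctuation dropped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Shared shingles divided by total distinct shingles (1.0 means identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)
```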

Besides program pages, event pages often suffer from duplicate content as well. For recurring events such as annual conferences, a common pitfall is creating multiple identical pages that contain the same information except for the event dates. To avoid having duplicate event pages, a better solution is to keep the same URL for the recurring event and update the information when necessary. By building an evergreen page, your event URL can accumulate page authority over time and have better visibility.

4. Do you have a robots.txt file?

Your website’s robots.txt is a text file that tells web crawlers, including search engine crawlers, which pages on your website they may crawl. Search engines check this file before crawling your site, so it affects which content they discover and, ultimately, what can show up on the search results page.

Having a robots.txt file can help you advise search engines on what parts of the website to crawl and what parts not to crawl. Most commonly, higher education websites use the robots.txt file to keep crawlers out of sections that don’t belong in search results, such as:

- Internal domains for staff and students

- Student portals that require logins

- Outdated web pages that can’t be immediately removed

- Staging sites

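For large higher education websites, listing disallowed folder paths in the robots.txt file can help focus your crawl budget on the pages that matter, but be careful not to accidentally disallow Googlebot from crawling your important URLs or even the entire site! This could jeopardize your website’s organic traffic. Also keep in mind that robots.txt is a set of instructions for crawlers, not access control: a disallowed URL can still be indexed if other pages link to it, so private areas such as portals and staging sites should also sit behind a login.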

Pro tip: If you’re not sure whether your website has a robots.txt file in place, you can simply add /robots.txt to the end of the root domain URL: https://sampleuniversity.edu/robots.txt. If your website doesn’t have a robots.txt file, you may want to contact your webmaster to create one for you.

Be sure to check if your robots.txt file is accidentally blocking any important folder paths or URLs from being crawled by search engines. It could look like this in your robots.txt file:

User-agent: Googlebot
Disallow: /example-subfolder/

User-agent: Googlebot
Disallow: /example-subfolder/page.html

If the folder or URL isn’t intended to be hidden, you should remove the line from your robots.txt file so it can be discovered by search engines.
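Python’s standard library includes a robots.txt parser you can use to test whether your important URLs are crawlable. This sketch feeds it the example rules above and returns the URLs Googlebot would be blocked from; `blocked_urls` is a hypothetical helper name. Keep in mind that Python’s parser applies rules in the order they appear and does not support wildcards, whereas Google uses the most specific matching rule, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser

# The example rules from this section, one directive per line as robots.txt requires.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /example-subfolder/
"""


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs that `user_agent` is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

To test your live file instead of a pasted copy, create the parser with `RobotFileParser("https://sampleuniversity.edu/robots.txt")` and call `read()` in place of `parse()`.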

5. Does an XML sitemap exist?

An XML sitemap file outlines your website’s information architecture and helps Google easily crawl and index your web pages. For this reason, all the pages that you want to show up in search results should be included in the XML sitemap. However, noindex URLs, such as internal resources and landing pages dedicated to PPC campaigns, should be left out of your XML sitemap to save your crawl budget and ensure that this type of content only reaches its intended audience.
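If your CMS can’t generate a sitemap, a basic one is simple to produce. This sketch assumes you can export a list of page URLs along with a flag for whether each page is set to noindex; it writes a minimal sitemap in the sitemaps.org format and leaves the noindex pages out. `build_sitemap` and its input format are illustrative, and real sitemaps often add optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """pages: iterable of (url, is_noindex) pairs. Returns the sitemap XML as a string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, is_noindex in pages:
        if is_noindex:
            continue  # keep noindex pages (PPC landing pages, internal resources) out
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # ElementTree escapes &, <, >
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")
```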

Pro tip: While XML sitemaps can exist under any URL or domain, one of the most common sitemap URLs is /sitemap.xml. To check if your website has an XML sitemap, add “/sitemap.xml” to the end of your institution’s domain URL, and it should show the sitemap in the browser: e.g., sampleuniversity.edu/sitemap.xml. If your XML sitemap isn’t located at /sitemap.xml, it’s recommended to list its location in your robots.txt file with a Sitemap: line so search engines can easily find it.
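You can also let robots.txt tell you where a sitemap lives. This sketch uses the `site_maps()` method of Python’s robots.txt parser (available in Python 3.8 and later) to read any `Sitemap:` lines, falling back to the common /sitemap.xml location when none are listed; `sitemap_locations` is a hypothetical helper name.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def sitemap_locations(root_url: str, robots_txt: str) -> list:
    """Sitemap URLs declared in robots.txt, or the conventional /sitemap.xml fallback."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    listed = parser.site_maps()  # None when the file has no Sitemap: lines
    return listed or [urljoin(root_url, "/sitemap.xml")]
```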

If your website doesn’t have a sitemap, you can create one through your content management system, and don’t forget to submit your XML sitemap to Google Search Console! That way, you can help Google speed up the process of crawling and indexing your website. If you’ve made significant changes to your website, such as a redesign or redevelopment, it’s good practice to resubmit the new sitemap to Search Console. After you submit your XML sitemap, Search Console flags issues in the Sitemaps report and shows errors and warnings for the submitted URLs in the Coverage report.

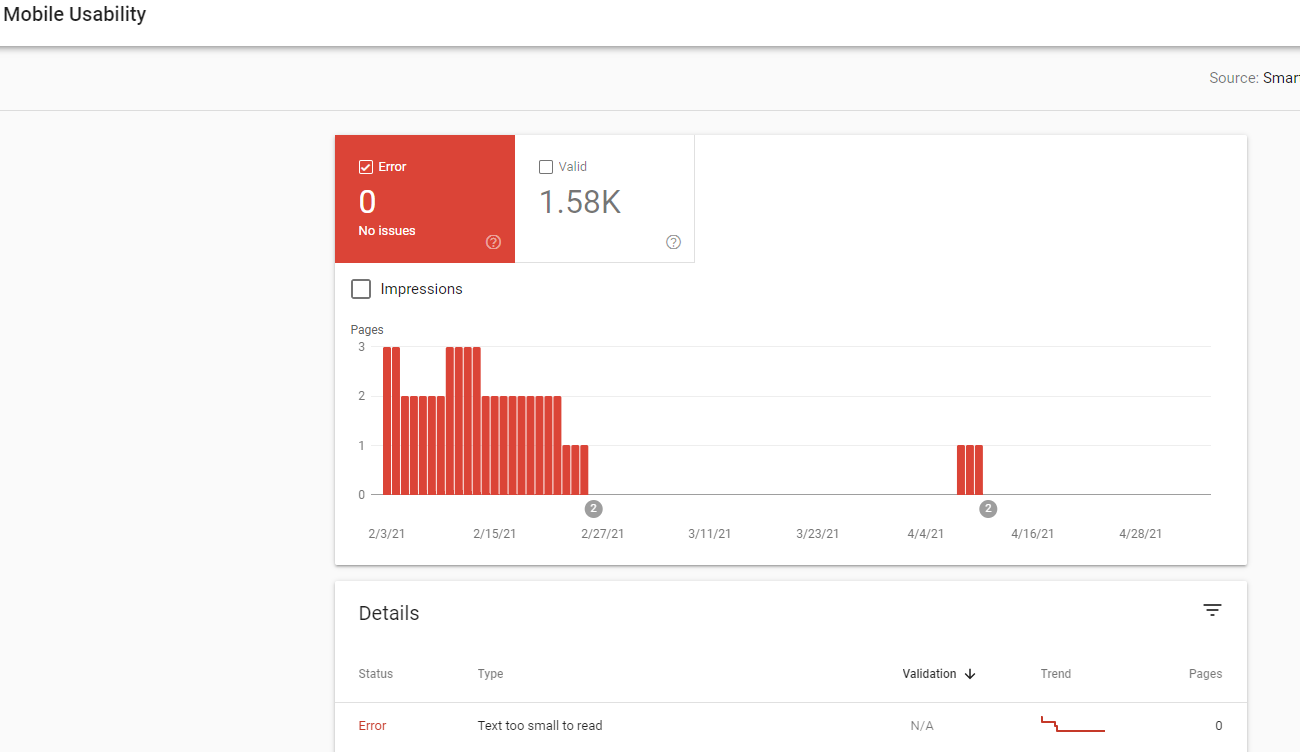

6. Is your website mobile-friendly?

Did you know that in the first quarter of 2021, mobile devices accounted for 59% of organic visits from search engines? In other words, many of your prospective students are doing their college research on their phones. A website that isn’t mobile-friendly often doesn’t fit the small viewport of a mobile screen, which makes it hard to read, or has poor interactivity, such as clickable links placed too close together.

In addition, Google uses mobile-first indexing, which means it primarily uses the mobile version of your website, rather than the desktop version, for indexing and ranking. As a result, mobile-friendly websites tend to rank higher in the SERP. Google is cracking down on this even more this year with its Core Web Vitals update, which places an emphasis on interactivity and page speed. Accordingly, if you want to capture more traffic, ensuring that your website is mobile responsive is key.

Pro tip: Not sure if your institution’s website is mobile-friendly? You can use Google’s Mobile-Friendly Test tool to see how specific URLs on your website measure up! You can also use Google Search Console’s Mobile Usability report to identify errors on specific pages.

(caption: Mobile Usability report on Google Search Console)

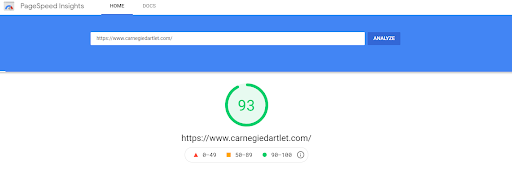
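A quick code-level sanity check is whether a page declares a viewport at all; without a `<meta name="viewport">` tag, mobile browsers typically lay the page out at desktop width and shrink it down. This sketch only detects the tag and returns its `content` value (responsive sites usually use `width=device-width, initial-scale=1`). It is no substitute for Google’s test, which also checks issues like text that is too small and clickable elements that are too close together; the helper names are illustrative.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remember the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and self.viewport is None and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def viewport_content(html: str):
    """Return the viewport content string, or None if the page declares no viewport."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.viewport
```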

7. Does the website pass Google’s page speed test?

Colleges and universities need to make sure their websites load fast. If your website takes more than three seconds to load, almost half your visitors will leave. In other words, slow page speed negatively affects user experience and endangers your website traffic.

In addition, with Google’s Page Experience algorithm update rolling out, page experience is becoming an important ranking factor. Google assesses page experience partly through Core Web Vitals, three measurements of loading speed, interactivity, and visual stability: Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS).
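Google publishes fixed thresholds for each Core Web Vital: Largest Contentful Paint is good at 2.5 seconds or less and poor above 4 seconds, First Input Delay is good at 100 milliseconds or less and poor above 300 milliseconds, and Cumulative Layout Shift is good at 0.1 or less and poor above 0.25. The sketch below encodes those bands. The function names are illustrative, and it assumes you pass in 75th-percentile field values, the level at which Google assesses a page.

```python
# Google's published Core Web Vitals bands: good if value <= first, poor if value > second.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "FID": (100, 300),   # First Input Delay, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric: str, value: float) -> str:
    """Rate one metric value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(p75_values: dict) -> bool:
    """True when every metric's 75th-percentile value is rated good."""
    return all(rate(metric, value) == "good" for metric, value in p75_values.items())
```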

Pro tip: Google offers a PageSpeed Insights tool to help website owners understand their page speed performance on both desktop and mobile. You can utilize this tool to evaluate your institution’s page speed and see if it passes Google’s assessment. The tool also gives helpful diagnostic insights into improving your page speed.

The Core Web Vitals report in Google Search Console is also a good resource for improving site performance. In this report, Google flags the URLs on your website that are affected by specific issues. After addressing your Core Web Vitals issues, you can validate your fixes on Search Console to have Google recrawl your website.

(caption: PageSpeed Insights shows the Carnegie website received a speed score of 93.)

8. Are your URLs well structured?

When creating web pages for your college or university, do you pay attention to the URLs? It’s important that URLs are well structured and appropriately categorized. SEO-friendly URLs help search engines understand your website structure and page content while aiding them in crawling all your site’s web pages.

Pro tip: SEO-friendly URLs should be short and descriptive and include relevant keywords. You should also check that your URLs are categorized under the correct folders or subfolders. For example, instead of placing an MBA program page directly under the root domain, like sampleuniversity.edu/mba, a well-categorized URL would look like this: sampleuniversity.edu/academics/graduate-programs/mba. Essentially, the URL needs to reflect where in the site architecture the page lives.

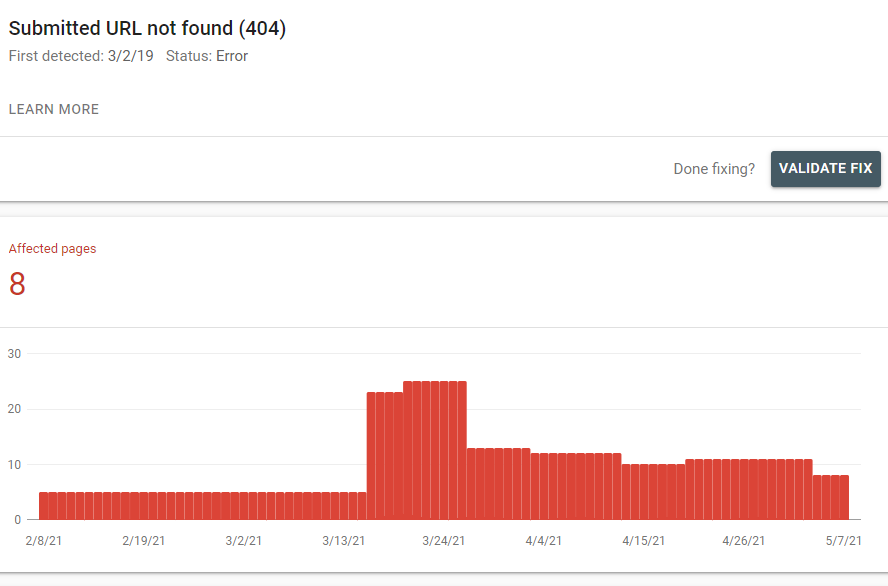
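When you plan new pages, it can help to generate URLs directly from each page’s position in your site architecture so the folders and slugs stay consistent. This is a small illustrative sketch; `slugify` and `page_url` are hypothetical helpers, and if your CMS already has its own slug rules, follow those instead.

```python
import re


def slugify(text: str) -> str:
    """Lowercase the text, drop apostrophes, and join the remaining words with hyphens."""
    text = re.sub("['\u2019]", "", text.lower())
    return re.sub(r"[^a-z0-9]+", "-", text).strip("-")


def page_url(domain: str, *sections: str) -> str:
    """Build a URL whose folders mirror where the page sits in the site architecture."""
    return f"https://{domain}/" + "/".join(slugify(s) for s in sections)
```

For example, `page_url("sampleuniversity.edu", "Academics", "Graduate Programs", "MBA")` produces the well-categorized MBA URL from the tip above.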

9. Does the website have 404 errors?

It’s not uncommon to see 404 errors and broken links on higher ed websites. For example, when a program is retired, all the web pages related to it might be deleted, so users who try to access those pages land on a 404 error page. Even after a page has been deleted, references to it linger across the web: search engines often keep the page indexed for a period of time, and both internal and external links may still point to it. When students land on a 404 page, they can become frustrated and move on to other college websites.

Broken links and 404 errors create a poor user experience. Google could rank your pages lower if it sees your site as untrustworthy and poorly maintained. In short, 404 errors can hurt your search engine visibility.

Pro tip: You can use the Coverage report in Google Search Console to find the URLs on your site that return 404 errors. For any broken links, you’ll want to set up 301 redirects to relevant live pages. This ensures that users can find information similar to what they were looking for. Furthermore, a 301 redirect passes the link equity of the deleted page on to the page it redirects to. This can be particularly valuable for older pages that get deleted, since they have often accumulated links over the years.

(caption: Google Search Console’s Coverage report finds eight pages affected by 404 errors.)
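Redirects added over several site updates can chain together (an old page redirects to a page that was later retired and redirected again), which adds extra hops for users and crawlers. Before loading a redirect list into your server or CMS, you can flatten it so every retired URL points straight at its final live destination. This sketch assumes your redirects are a simple old-path to new-path mapping; the function names and the hop limit are illustrative.

```python
def resolve(redirects: dict, url: str, max_hops: int = 10) -> str:
    """Follow old -> new mappings until reaching a URL that is not redirected."""
    seen = [url]
    while url in redirects:
        url = redirects[url]
        if url in seen or len(seen) > max_hops:
            raise ValueError(f"redirect loop or overly long chain: {' -> '.join(seen + [url])}")
        seen.append(url)
    return url


def flatten(redirects: dict) -> dict:
    """Point every retired URL directly at its final destination, removing chains."""
    return {old: resolve(redirects, old) for old in redirects}
```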

10. Are your web pages thin on content?

Research has shown that long-form content consistently ranks higher on search engines, and the average Google first-page result contains 1,447 words. However, word count isn’t everything; quality matters. Users prefer to read helpful content that can answer their questions, so any added content should be specific and relevant to their needs. Content that’s short and doesn’t address any user questions is considered low value by search engines and prospective students alike.

You can detect thin content through your web pages’ text-to-HTML ratio, which compares the amount of visible text on a page to the amount of code. If your page is heavy on code but thin on text, its text-to-HTML ratio will be low. SEO tools treat this ratio as a rough indicator of relevancy: a low text-to-HTML ratio suggests thin content, which can inhibit your page’s ranking performance. When a page’s text-to-HTML ratio is lower than 10%, most SEO tools will flag it as an issue.
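To see roughly how a text-to-HTML ratio is calculated, the sketch below divides the number of visible text characters by the total number of characters of HTML, skipping the contents of `<script>` and `<style>` tags. SEO tools differ in exactly what they count, so treat this as an approximation rather than a reproduction of any particular tool’s score; the helper names are illustrative.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Accumulate text content, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Visible text characters (whitespace collapsed) divided by total HTML characters."""
    if not html:
        return 0.0
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())
    return len(text) / len(html)
```

A check such as `text_to_html_ratio(html) < 0.10` would flag a page under the 10% threshold mentioned above.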

Pro tip: There are several ways to fix thin content. You could conduct keyword research to understand your students’ search intent and what they’re looking for on a specific page. After your keyword research, you can rewrite the page to provide more helpful information that can address their needs.

You can also look for thin pages that cover similar or identical topics and consider consolidating them into one page that contains comprehensive information for users.

In addition, for some outdated pages that are thin on content and generate little to zero traffic to your website, it’s recommended to re-evaluate the importance of these pages. You may want to just remove these low-value pages to optimize your crawl budget.

If you have a low text-to-HTML ratio, besides adding more content to your pages, you also need to examine the efficiency of your code, remove any unnecessary HTML, and avoid using inline CSS and JavaScript.

Ensure that your higher education website is in good health with a technical SEO audit

It’s important to note that the top 10 technical SEO areas listed here only scratch the surface—there are dozens of factors that go into Google’s ranking algorithm. This list is designed to give you some key areas to work on, but a full technical site audit will pinpoint every issue affecting your site’s search engine visibility.

At Carnegie, we consult with colleges and universities on all the unique areas of improvement for their specific websites, creating an action plan for their internal web teams to execute.

If you’re interested in an in-depth technical SEO audit or need help fixing your site’s technical issues, contact us today!